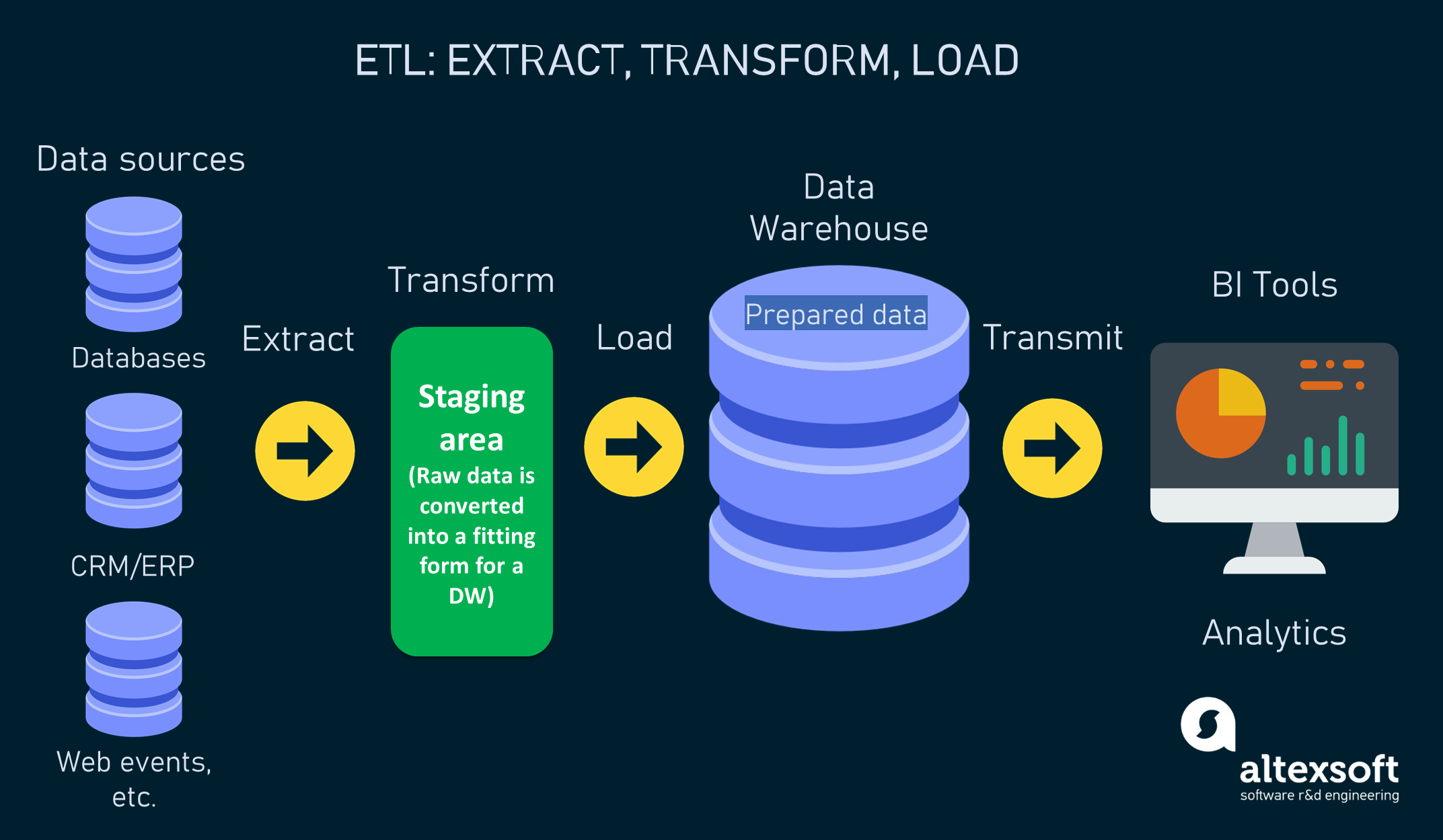

# Apache Airflow

Apache Airflow Core includes the webserver, scheduler, CLI, and other components needed for a minimal Airflow installation. Provider packages include integrations with third-party projects and are updated independently of the Apache Airflow core.

Machine learning workflows: Airflow can be used to orchestrate and manage machine learning workflows, such as model training, evaluation, and deployment. Airflow also allows data science teams to monitor ETL processes and ML training.

To check our Python script for syntax issues, just run the .py file on the command line.

Airflow keeps its files in a home directory, and you can lay the foundation somewhere other than the default if you prefer. Running `airflow webserver -p 8080` starts the web server; the default port is 8080. Then visit `localhost:8080` in the browser and enable the example DAG on the home page.

For instance, when you start the webserver, you should see output similar to the following:

`(datasci-dev) ttmac:lec05 theja$ airflow webserver -p 8080`

`INFO - Filling up the DagBag from /Users/theja/airflow/dags`

`Users/theja/miniconda3/envs/datasci-dev/lib/python3.7/site-packages/airflow/models/dag.py:1342: PendingDeprecationWarning: The requested task could not be added to the DAG because a task with task_id create_tag_template_field_result is already in the DAG.`

Starting in Airflow 2.0, trying to overwrite a task will raise an exception.
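To make the duplicate `task_id` behaviour concrete, here is a toy model of a task registry. It is only a sketch: `ToyDag`, `add_task`, and the `strict` flag are hypothetical names invented for illustration, and `ValueError` stands in for whatever exception Airflow itself raises. It does not use or reproduce Airflow's own classes.

```python
import warnings
from typing import Dict


class ToyDag:
    """Toy stand-in for a DAG's task registry (hypothetical, not Airflow's class)."""

    def __init__(self, dag_id: str, strict: bool = True) -> None:
        self.dag_id = dag_id
        # strict=True mimics the "raise an exception" behaviour described above;
        # strict=False mimics the older "warn and skip" behaviour.
        self.strict = strict
        self.tasks: Dict[str, str] = {}

    def add_task(self, task_id: str, description: str) -> None:
        if task_id in self.tasks:
            message = f"a task with task_id {task_id} is already in the DAG"
            if self.strict:
                raise ValueError(message)
            warnings.warn(message, PendingDeprecationWarning)
            return  # keep the first task, skip the duplicate
        self.tasks[task_id] = description


if __name__ == "__main__":
    dag = ToyDag("example")
    dag.add_task("create_tag_template_field_result", "first definition")
    try:
        dag.add_task("create_tag_template_field_result", "second definition")
    except ValueError as err:
        print(f"rejected: {err}")
```

In both modes the first task registered under a given `task_id` is the one that survives, so the practical fix is the same: give every task in a DAG a unique `task_id`.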

From the quickstart page: Airflow needs a home, and `~/airflow` is the default.
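The home-directory rule can be sketched in a few lines of Python. This assumes the location is controlled by the `AIRFLOW_HOME` environment variable, which is what the Airflow quickstart exports but which is not named in the text above. The helper `resolve_airflow_home` is a hypothetical name, and the sketch only mirrors the rule; it does not call Airflow.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def resolve_airflow_home(env: Optional[Mapping[str, str]] = None) -> Path:
    """Return AIRFLOW_HOME if it is set and non-empty, else the default ~/airflow."""
    env = os.environ if env is None else env
    configured = env.get("AIRFLOW_HOME")
    if configured:
        return Path(configured).expanduser()
    return Path("~/airflow").expanduser()


if __name__ == "__main__":
    # Prints the directory this sketch would treat as Airflow's home.
    print(resolve_airflow_home())
```

Passing a plain dictionary instead of the real environment makes it easy to see both cases: an empty mapping falls back to `~/airflow`, while a mapping with `AIRFLOW_HOME` set returns that path.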

# Airflow ETL machine learning install

Let's install the airflow package and get a server running.
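Before starting the server, it can help to confirm that the package is importable from the active environment. The check below is a minimal sketch using only the standard library. `is_installed` is a hypothetical helper name, and the suggested `pip install apache-airflow` command is an assumption about how the package was installed, since the text above does not show the install command.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """True if a top-level module with this name can be found for import."""
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    if is_installed("airflow"):
        print("airflow is importable; the webserver can be started next")
    else:
        print("airflow not found; install it first, e.g. pip install apache-airflow")
```

This only checks that the module can be located. It does not verify the installed version or that the metadata database has been initialised.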

